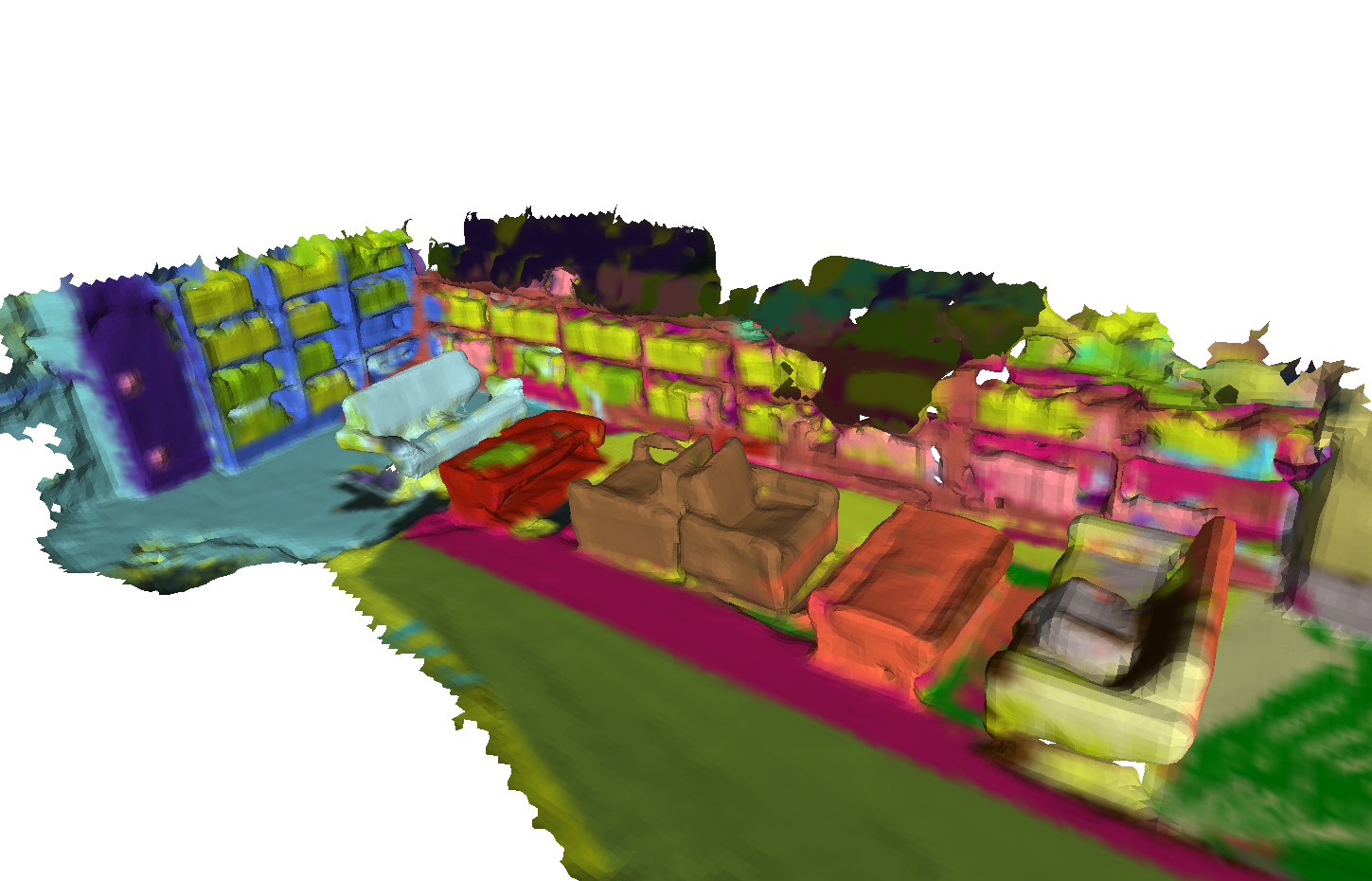

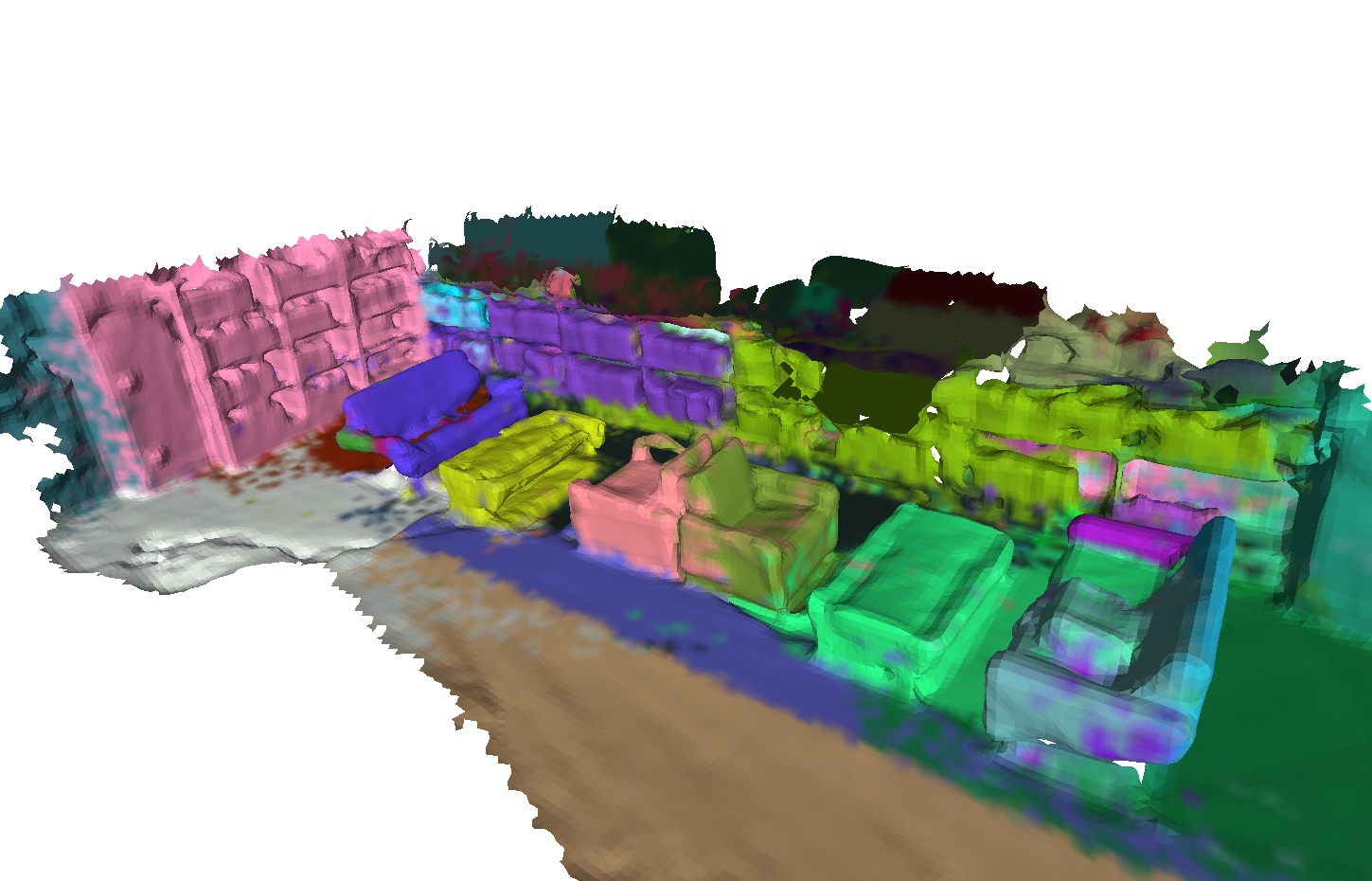

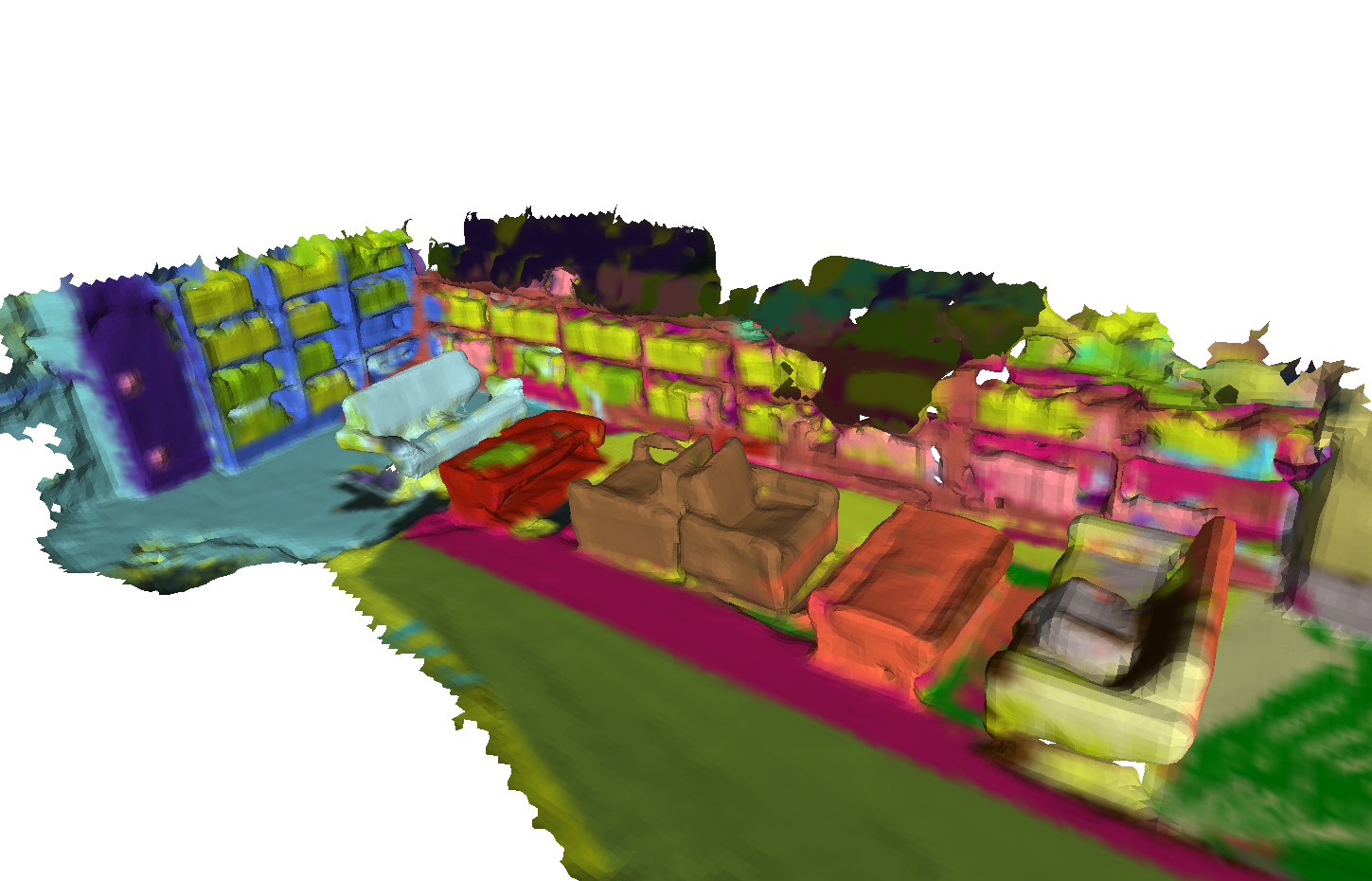

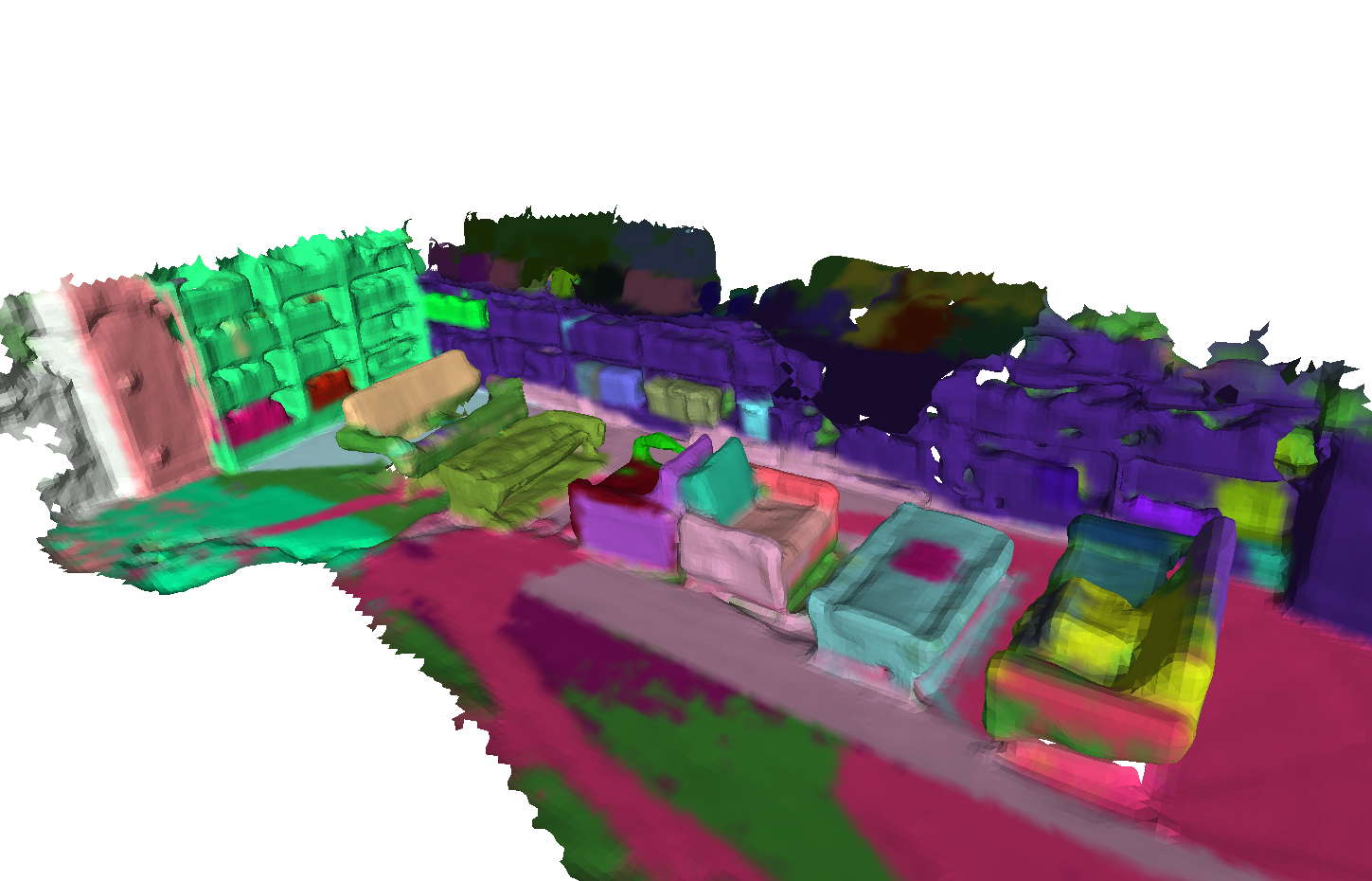

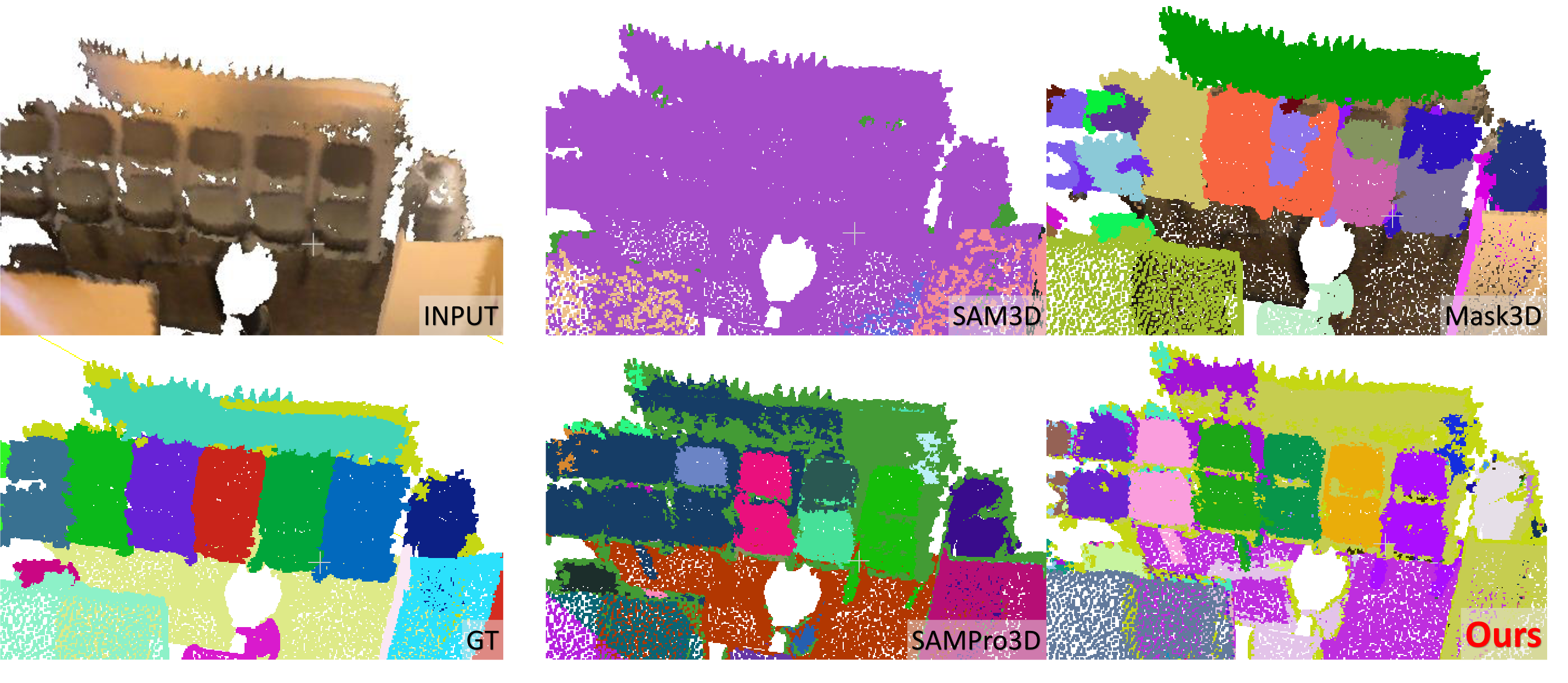

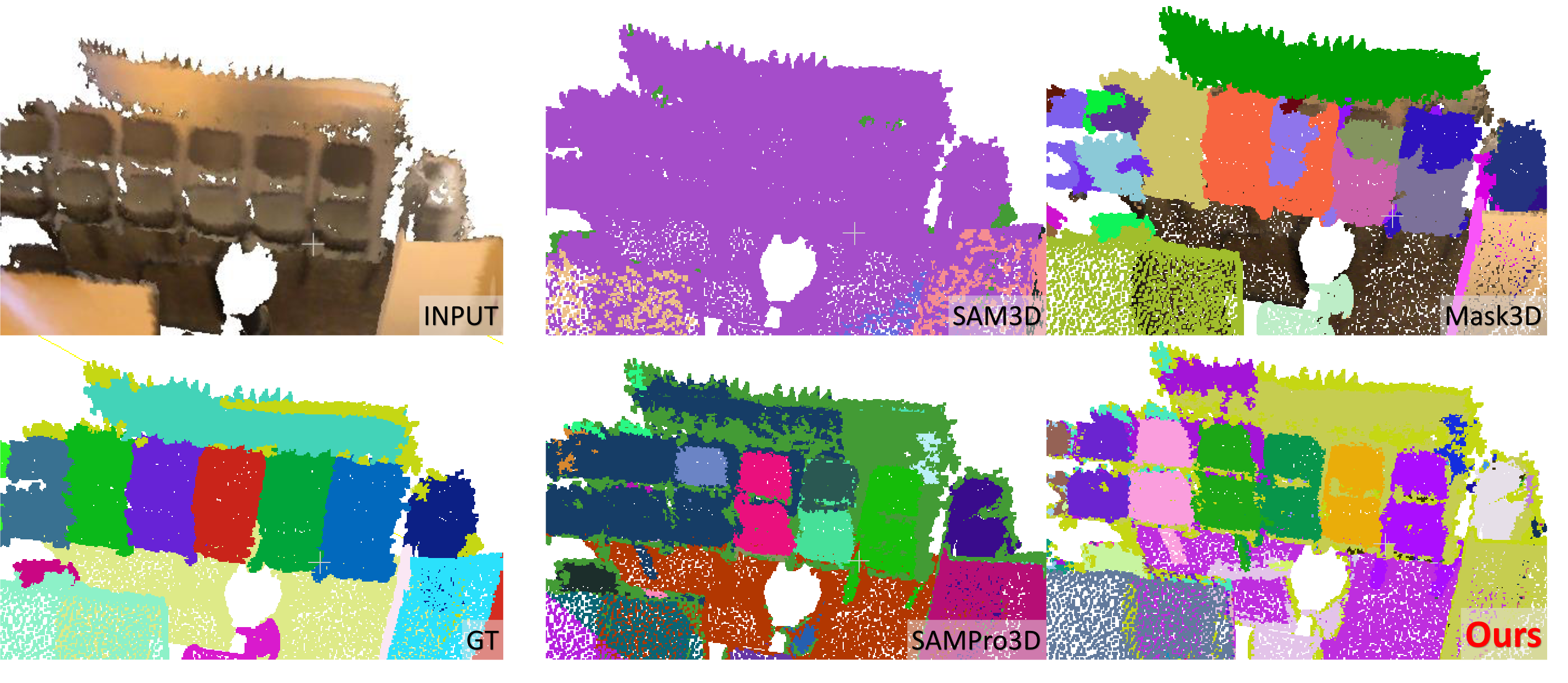

Recognizing objects in the 3D world is a significant challenge for robotics. Because high-quality 3D data is scarce, directly training a general-purpose segmentation model in 3D is almost infeasible. Meanwhile, vision foundation models (VFMs) have revolutionized 2D computer vision with outstanding performance, which makes using them to assist 3D perception a promising direction. However, most existing VFM-assisted methods neither effectively address the 2D-3D inconsistency problem nor adequately provide semantic information for individual 3D instances. To address these two issues, this paper introduces RE0, a novel framework for zero-shot 3D instance segmentation. Given a 3D point cloud and multi-view posed RGB-D images, RE0 leverages 3D geometric information, 2D-3D projection relationships, and CLIP semantic features. Specifically, we use CropFormer to extract masks from the multi-view posed images, combine them with the projection relationships to assign a label to each point in the point cloud, and achieve instance-level consistency through inter-frame information interaction. We then use the projection relationships again to assign CLIP semantic features to the point cloud and to aggregate small point-cloud fragments. Notably, RE0 requires no additional training and needs only a single inference pass of CropFormer and a single inference pass of CLIP. Experiments on ScanNet200 and ScanNet++ show that our method achieves higher-quality segmentation than previous zero-shot methods. Our code and demos are available at https://recognizeeverything.github.io/, and running the method requires only one RTX 3090 GPU.
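The projection relationship that the abstract relies on can be illustrated with a short sketch. This is not RE0's released code; it is a minimal example under stated assumptions: a pinhole camera with a 3x3 intrinsic matrix `K`, a 4x4 camera-to-world pose, a depth map in meters, and an integer mask-id image such as a 2D segmenter like CropFormer might produce. The function names, the `depth_tol` occlusion threshold, and the plain per-point averaging of mask features are hypothetical choices made for illustration only.

```python
import numpy as np


def project_points(points_world, K, cam_to_world):
    """Project (N, 3) world-space points into one posed frame.

    Returns integer pixel columns u, rows v, and camera-space depth z.
    Assumes a pinhole model with intrinsics K and a 4x4 camera-to-world pose.
    """
    world_to_cam = np.linalg.inv(cam_to_world)
    homo = np.hstack([points_world, np.ones((len(points_world), 1))])
    cam = (world_to_cam @ homo.T).T[:, :3]
    z = cam[:, 2]
    # Points at or behind the camera get a dummy divisor; callers mask them out via z.
    safe_z = np.where(z > 1e-6, z, 1.0)
    pix = (K @ cam.T).T
    u = np.round(pix[:, 0] / safe_z).astype(int)
    v = np.round(pix[:, 1] / safe_z).astype(int)
    return u, v, z


def assign_mask_labels(points_world, K, cam_to_world, depth, mask, depth_tol=0.05):
    """Label each point with the 2D mask id of the pixel it projects to, or -1.

    A point counts as visible only if it is in front of the camera, lands inside
    the image, and its depth is within depth_tol meters of the observed depth
    (a simple occlusion test; depth_tol is a hypothetical parameter).
    """
    h, w = depth.shape
    u, v, z = project_points(points_world, K, cam_to_world)
    labels = np.full(len(points_world), -1, dtype=int)
    inside = (z > 1e-6) & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    idx = np.nonzero(inside)[0]
    visible = np.abs(depth[v[idx], u[idx]] - z[idx]) < depth_tol
    vis_idx = idx[visible]
    labels[vis_idx] = mask[v[vis_idx], u[vis_idx]]
    return labels


def accumulate_mask_features(labels_per_frame, feats_per_frame, dim):
    """Average, per point, the feature vectors of the masks it received across frames.

    feats_per_frame holds one dict per frame mapping mask id -> (dim,) vector
    (for example a CLIP embedding of the mask; how masks are encoded is assumed).
    Points never seen in any frame keep an all-zero feature.
    """
    n = len(labels_per_frame[0])
    total = np.zeros((n, dim))
    count = np.zeros(n)
    for labels, feats in zip(labels_per_frame, feats_per_frame):
        for i, lab in enumerate(labels):
            if lab >= 0 and lab in feats:
                total[i] += feats[lab]
                count[i] += 1
    out = np.zeros((n, dim))
    seen = count > 0
    out[seen] = total[seen] / count[seen, None]
    return out


if __name__ == "__main__":
    # Toy 5x5 frame with identity pose; mask ids 7 and 4 at pixels (2, 2) and (3, 2).
    K = np.array([[100.0, 0.0, 2.0], [0.0, 100.0, 2.0], [0.0, 0.0, 1.0]])
    pts = np.array([[0.0, 0.0, 1.0], [0.01, 0.0, 1.0], [0.0, 0.0, -1.0], [0.0, 0.0, 2.0]])
    depth = np.ones((5, 5))
    mask = np.zeros((5, 5), dtype=int)
    mask[2, 2], mask[2, 3] = 7, 4
    print(assign_mask_labels(pts, K, np.eye(4), depth, mask).tolist())  # [7, 4, -1, -1]
```

In the toy example, the third point lies behind the camera and the fourth projects onto a pixel whose observed depth is nearer than the point itself, so both stay unlabeled. The depth test matters because without it an occluded point would inherit the label of whatever surface hides it, which is one source of the 2D-3D inconsistency the abstract mentions.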
